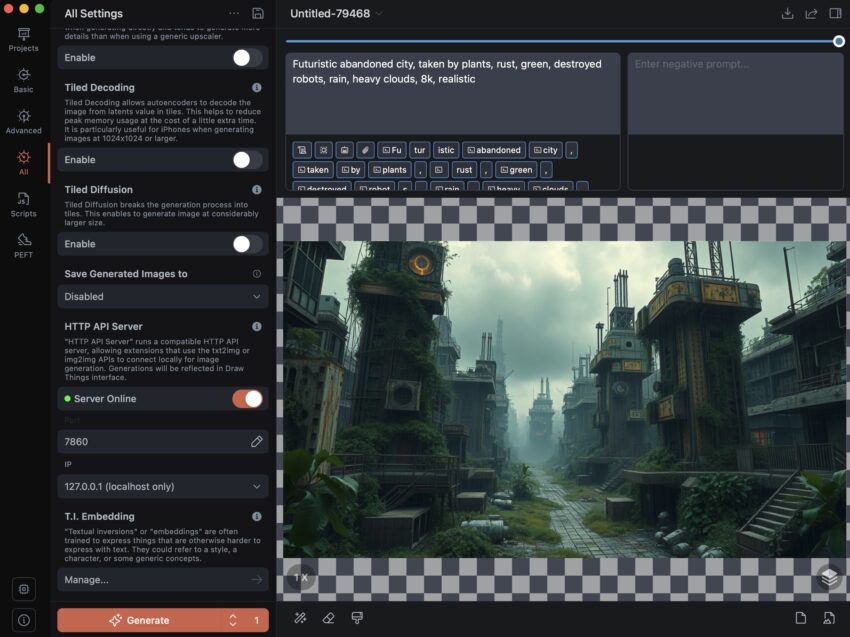

Today, I will take a deeper look at the Draw Things Web API. I couldn’t find documentation for it, but other people have already posted about it, so I haven’t had to check the Discord channel yet.

I can see that there are two useful endpoints for text-to-image generation. There are also very similar ones for image-to-image, and possibly for training LoRAs, but I’m not using them in my work yet.

GET http://localhost:7860/ – This will return the current setup in Draw Things as JSON, so we can retrieve information about what is configured.
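As a rough sketch of that first call, the snippet below fetches the current setup with Python's standard library and lists the top-level settings. It assumes the response body is a single JSON object; `fetch_config` and `top_level_keys` are names made up for this example, not part of Draw Things.

```python
import json
import urllib.request

BASE_URL = "http://localhost:7860/"  # address used in this post


def fetch_config(base_url=BASE_URL, timeout=10):
    """GET the root endpoint and parse the JSON body."""
    with urllib.request.urlopen(base_url, timeout=timeout) as response:
        return json.load(response)


def top_level_keys(config):
    """Return the setting names in a stable, sorted order (assumes a JSON object)."""
    return sorted(config)


if __name__ == "__main__":
    # Requires Draw Things to be running with its HTTP API enabled.
    config = fetch_config()
    for key in top_level_keys(config):
        print(f"{key}: {config[key]!r}")
```

Printing the keys first is a cheap way to discover which settings the app exposes before relying on any of them.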

POST http://localhost:7860/sdapi/v1/txt2img with a payload like:

    {
      "height": 576,
      "width": 1024,
      "seed": -1,
      "prompt": "Futuristic abandoned city, taken by plants, rust, green, destroyed robots, rain, heavy clouds, 8k, realistic",
      "negative_prompt": ""
    }

This will execute the prompt on the currently selected model (e.g., flux_1_schnell_q5p.ckpt), sampler, and other settings in the Draw Things UI. The response will contain an object with an array of images in Base64 format, which we can save or send to another destination.
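Here is a minimal sketch of sending that payload and saving the returned images, again with only the standard library. The `"images"` key is an assumption borrowed from the AUTOMATIC1111-style API that this endpoint path mirrors, so check the real response before relying on it. The helper names (`txt2img`, `save_images`, `guess_extension`) and the `output` directory are hypothetical.

```python
import base64
import json
import urllib.request
from pathlib import Path

API_URL = "http://localhost:7860/sdapi/v1/txt2img"


def txt2img(payload, url=API_URL, timeout=600):
    """POST the payload as JSON and return the parsed response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def guess_extension(data):
    """Pick a file extension from the image's magic bytes."""
    if data.startswith(b"\x89PNG\r\n\x1a\n"):
        return ".png"
    if data.startswith(b"\xff\xd8\xff"):
        return ".jpg"
    return ".bin"  # unknown format: keep the raw bytes


def save_images(result, out_dir, prefix="image"):
    """Decode each Base64 entry under "images" (assumed key) and write it to disk."""
    out_path = Path(out_dir)
    out_path.mkdir(parents=True, exist_ok=True)
    saved = []
    for index, encoded in enumerate(result.get("images", [])):
        data = base64.b64decode(encoded)
        target = out_path / f"{prefix}-{index}{guess_extension(data)}"
        target.write_bytes(data)
        saved.append(target)
    return saved


if __name__ == "__main__":
    payload = {
        "height": 576,
        "width": 1024,
        "seed": -1,
        "prompt": "Futuristic abandoned city, taken by plants, rust, green, "
                  "destroyed robots, rain, heavy clouds, 8k, realistic",
        "negative_prompt": "",
    }
    for path in save_images(txt2img(payload), "output"):
        print(path)
```

The long timeout is deliberate, since generation can take a while depending on the model and hardware.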

At the moment, I’m curious whether I can get just the image file location, since I still need the image on my local disk, converted to WebP. Of course, I can handle this in my own API as well, but if it’s already possible, there’s no need to reinvent the wheel.
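If it turns out the conversion has to happen on my side, a sketch like the one below would do it. It assumes the image has already been written to local disk and that the third-party Pillow package is installed with WebP support; `webp_path_for` and `convert_to_webp` are hypothetical helper names, and the quality value is only a starting point.

```python
from pathlib import Path


def webp_path_for(source):
    """Return the sibling path with a .webp extension."""
    return Path(source).with_suffix(".webp")


def convert_to_webp(source, quality=90):
    """Re-encode an image file as WebP next to the original."""
    # Imported lazily so the path helper works without Pillow installed.
    from PIL import Image  # third-party: pip install Pillow

    destination = webp_path_for(source)
    with Image.open(source) as image:
        image.save(destination, format="WEBP", quality=quality)
    return destination
```

For example, `convert_to_webp("output/image-0.png")` would write `output/image-0.webp` and return that path.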

Edit: I can also look at https://github.com/drawthingsai/draw-things-community. Maybe I can follow the Swift code there, or I will find more detailed documentation.