Models

The Models section stores information about the available models and lets us modify them by attaching functions, tools, system prompts, etc. We can even create custom models on top of existing LLMs such as Llama 3.x. Note that this is not fine-tuning: only the model's configuration changes, not its weights. For example, we can create a model that answers as a senior Java developer, briefly and without unnecessary explanations.
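To show that such a custom model is only configuration, here is a minimal sketch of the senior Java developer example sent directly to Ollama's `/api/chat` endpoint. It assumes a local Ollama server on the default port 11434 and an already pulled model; the model name `llama3.1`, the prompt wording, the temperature, and the helper names `build_chat_request` and `ask` are illustrative assumptions, not how OpenWebUI implements custom models internally. The persona is just a system message plus sampling options; the weights are never touched.

```python
import json
import urllib.request

# Assumption: a local Ollama server on its default port; the model name used
# below ("llama3.1") is illustrative and must already be pulled.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"

SYSTEM_PROMPT = (
    "You are a senior Java developer. "
    "Answer briefly, without unnecessary explanations."
)


def build_chat_request(model: str, question: str, temperature: float = 0.2) -> dict:
    """Build an Ollama /api/chat payload (hypothetical helper).

    The persona lives entirely in the system message and options;
    the model weights stay the same (this is not fine-tuning).
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
        ],
        "stream": False,  # request one JSON response instead of a stream
        "options": {"temperature": temperature},
    }


def ask(model: str, question: str) -> str:
    """Send the request to the local Ollama server and return the reply text."""
    payload = json.dumps(build_chat_request(model, question)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    # A non-streamed /api/chat response carries the answer in body["message"].
    return body["message"]["content"]
```

With the server running, `ask("llama3.1", "How do I make a class immutable?")` should return a short, Java-focused answer. In OpenWebUI, the same effect comes from setting the system prompt and parameters when creating the model.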

Tools

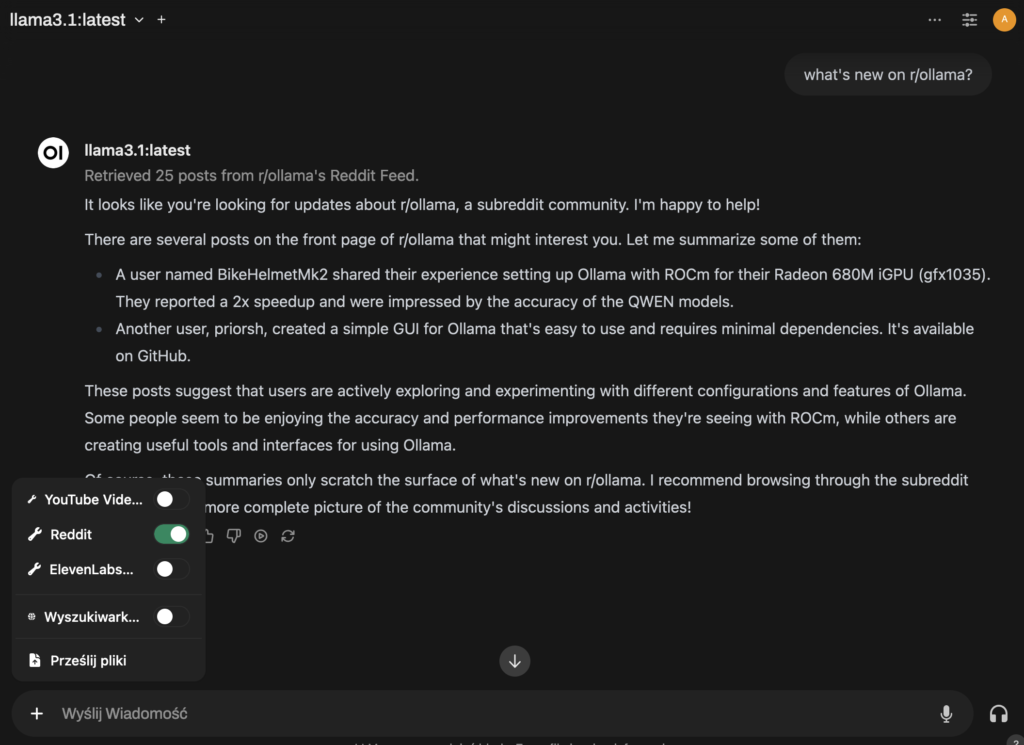

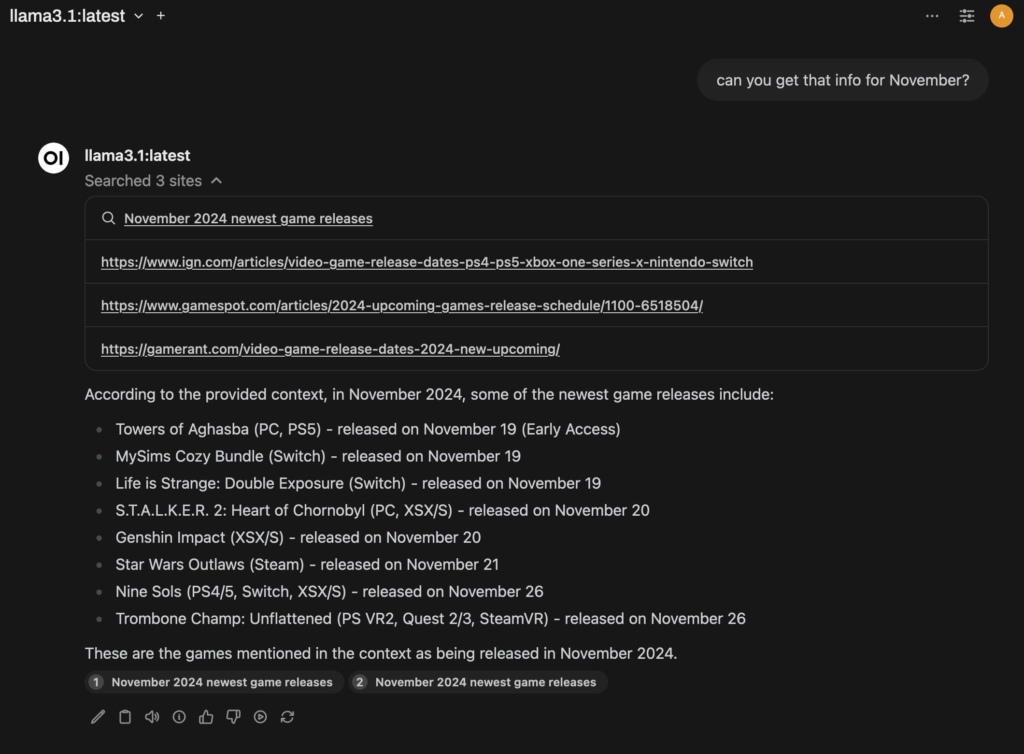

In OpenWebUI, we can use tools shared by its community. Many tools are already available, e.g.:

– querying search engines and retrieving the results

– Reddit: fetching data about users or the newest threads in subreddits

– summarizing YouTube videos from their English transcripts

– generating speech (TTS) or images from given text (ElevenLabs, Flux, …)

Tools can be obtained from https://openwebui.com/tools after registering. We only need to provide the URL of our local setup, then review each tool in the local OpenWebUI and save it.
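Reviewing a tool before saving it is easier when you know its shape. As far as I know, an OpenWebUI tool is a Python file with a metadata docstring and a class named `Tools`; the type hints and docstrings of its methods are what the model sees when deciding whether to call them. The sketch below is a hypothetical tool (the title and the methods `get_current_time` and `count_words` are illustrative, not an existing community tool) that needs no external API; check the OpenWebUI documentation for the current format.

```python
"""
title: Local Utilities (hypothetical example)
author: example
version: 0.1.0
description: Two small tools that run fully locally, no API key needed.
"""

from datetime import datetime, timezone


class Tools:
    def __init__(self):
        # Community tools often define a nested `Valves` class here for
        # settings such as API keys; this sketch needs none, so it is omitted.
        pass

    def get_current_time(self) -> str:
        """
        Get the current date and time in UTC, in ISO 8601 format.
        """
        return datetime.now(timezone.utc).isoformat(timespec="seconds")

    def count_words(self, text: str) -> str:
        """
        Count the words in a piece of text.
        :param text: The text whose words should be counted.
        """
        n = len(text.split())  # split() on any whitespace, ignoring repeats
        return f"The text contains {n} words."
```

Returning plain strings keeps the result easy for the model to quote back in its answer.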

With Ollama, all of this can run completely locally with different models (provided the model is open source; web searches are still accessed via an API). We can, of course, also use other APIs, including paid ones.

Functions

Functions are attached to models and can be, e.g., filters (acting on the inlet, the outlet, or both), actions, or pipelines. A function must be enabled in the Functions tab and added to a model; after that, it is applied automatically.
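A filter is the easiest function type to picture. The sketch below assumes the layout used by OpenWebUI filter functions, a `Filter` class with `inlet` and `outlet` methods that receive and return the request or response body; the history-trimming logic and the `max_turns` setting are hypothetical. `inlet` edits the body before it reaches the model, and `outlet` edits it after the model has answered.

```python
"""
title: Trim History Filter (hypothetical example)
version: 0.1.0
"""

from typing import Optional


class Filter:
    def __init__(self):
        # Hypothetical setting: how many non-system messages to keep.
        # Real filters usually expose settings like this through `Valves`.
        self.max_turns = 6

    def inlet(self, body: dict, __user__: Optional[dict] = None) -> dict:
        """Before the model: keep all system messages (moved to the front)
        plus only the most recent `max_turns` other messages."""
        messages = body.get("messages", [])
        system = [m for m in messages if m.get("role") == "system"]
        others = [m for m in messages if m.get("role") != "system"]
        start = max(len(others) - self.max_turns, 0)
        body["messages"] = system + others[start:]
        return body

    def outlet(self, body: dict, __user__: Optional[dict] = None) -> dict:
        """After the model: strip trailing whitespace from assistant replies."""
        for m in body.get("messages", []):
            if m.get("role") == "assistant" and isinstance(m.get("content"), str):
                m["content"] = m["content"].rstrip()
        return body
```

Trimming in `inlet` keeps long chats within a small local model's context window without changing what the user sees in the chat history.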

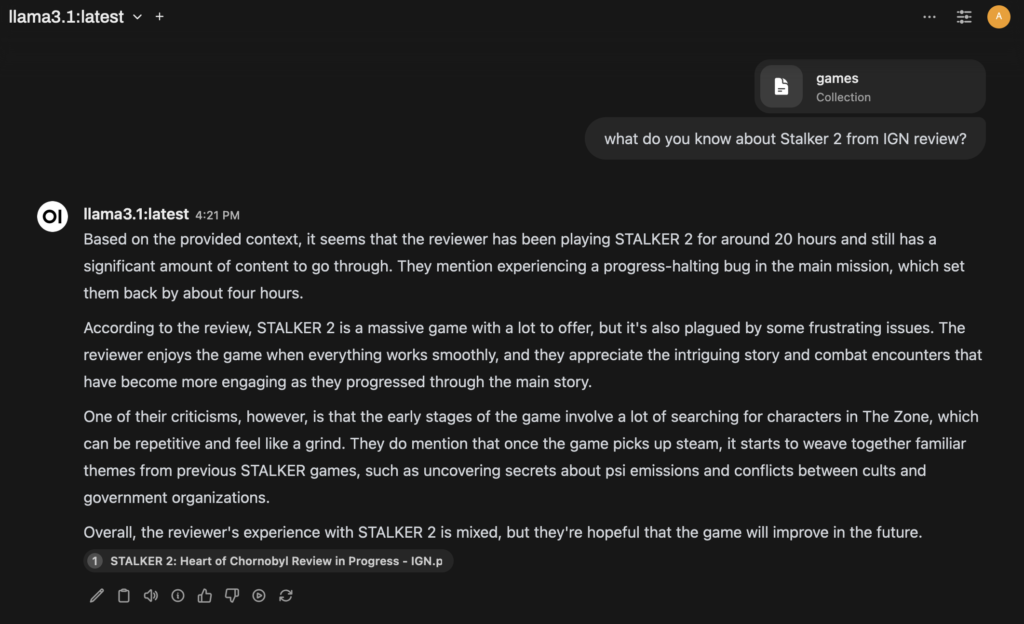

Knowledge

Knowledge imports documents into a knowledge base, which can later be attached to a model or referenced in a chat with `#`. Documents are imported into a vector database, so importing larger documents can take some time, especially if a vision model is used. In my example, I simply created a PDF from a website and added it to a collection. It works very well, and our documents are not shared with any other company.
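What importing into a vector database involves can be sketched in a few lines: documents are split into chunks, each chunk is turned into a vector, and a question retrieves the most similar chunks, which are then given to the model as context. This is also why large documents take longer, since every chunk must be embedded. The sketch is a toy under loud assumptions: the word-count "embedding", the chunk sizes, and `TinyVectorStore` are hypothetical stand-ins, while OpenWebUI itself uses a real embedding model and vector database.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into chunks of `size` words, overlapping by `overlap` words."""
    assert 0 <= overlap < size
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a neural model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class TinyVectorStore:
    """Hypothetical in-memory store: (chunk text, vector) pairs."""

    def __init__(self):
        self.items: list[tuple[str, Counter]] = []

    def add_document(self, text: str) -> None:
        # Each chunk is embedded separately; this is the slow step for big files.
        for c in chunk(text):
            self.items.append((c, embed(c)))

    def search(self, query: str, k: int = 3) -> list[str]:
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [c for c, _ in ranked[:k]]
```

A real setup swaps `embed` for an embedding model and the list for a proper vector index, but the chunk, embed, and retrieve flow stays the same.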

You can also take a look at this tutorial: