When asked whether Ollama models can be used the same way as o1 (i.e., returning output in the Whole or Diff format, ready for merging), the repository owner replied:

Ollama models don’t really work with diff unfortunately. Whole you want something with at least 14b params

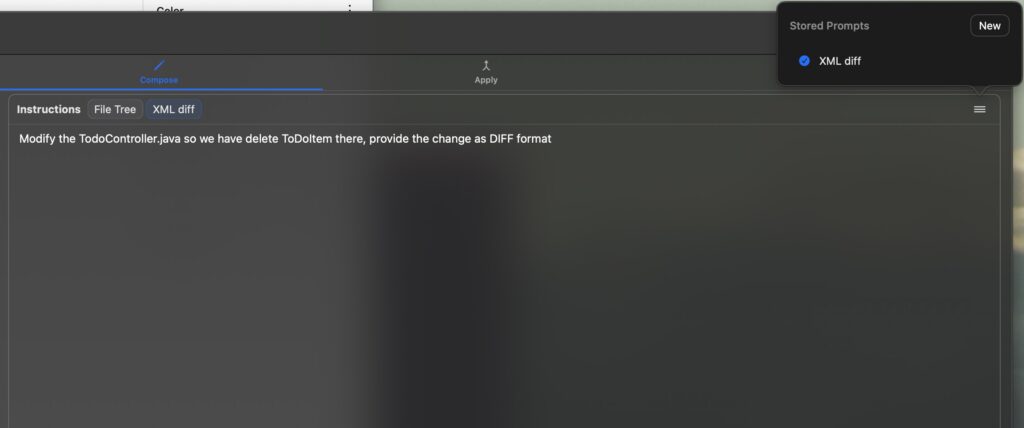

However, deepseek-coder-v2:16b-lite-instruct-q4_0, for instance, still doesn’t work for me. So I tried to solve it the way Claude 3.5 Sonnet is used in the Apply tab of Repo Prompt: I created a system prompt and attached it to Instructions in Compose.

The prompt itself looks like this (it doesn’t cover everything; it only works for me with llama3.1, and codellama ignores it):

Response format as XML diff:

<Plan>

<!-- What is planned to be done -->

</Plan>

<file path="filename" action="modify">

<change>

<description><!-- Modification description --></description>

<search>

```

code to be replaced

```

</search>

<content>

```

replacement

```

</content>

</change>

</file>

Rules:

- you can have as many `change` tags under `file` as changes

- you can have as many `file` as siblings

- in code blocks do not replace `<` and `>` with entities, use just < and > where required

- do not add anything else between tags

- if you need to create new file create new filename and change `action="modify"` to `action="create"`

Example:

<Plan>

I'll add a rename endpoint in Controller and ...

</Plan>

<file path="backend/src/main/java/com/example/Controller.java" action="modify">

<change>

<description>Rename endpoint in Controller</description>

<search>

```

@PutMapping("/reorder")

```

</search>

<content>

```

@PostMapping("/reordering-new")

```

</content>

</change>

</file>

<Plan>

I'll add field in Item ...

</Plan>

<file path="backend/src/main/java/com/example/Item.java" action="modify">

<change>

<description>Add field firstName in Item</description>

<search>

```

private Map map;

```

</search>

<content>

```

private Map map;

private String firstName;

```

</content>

</change>

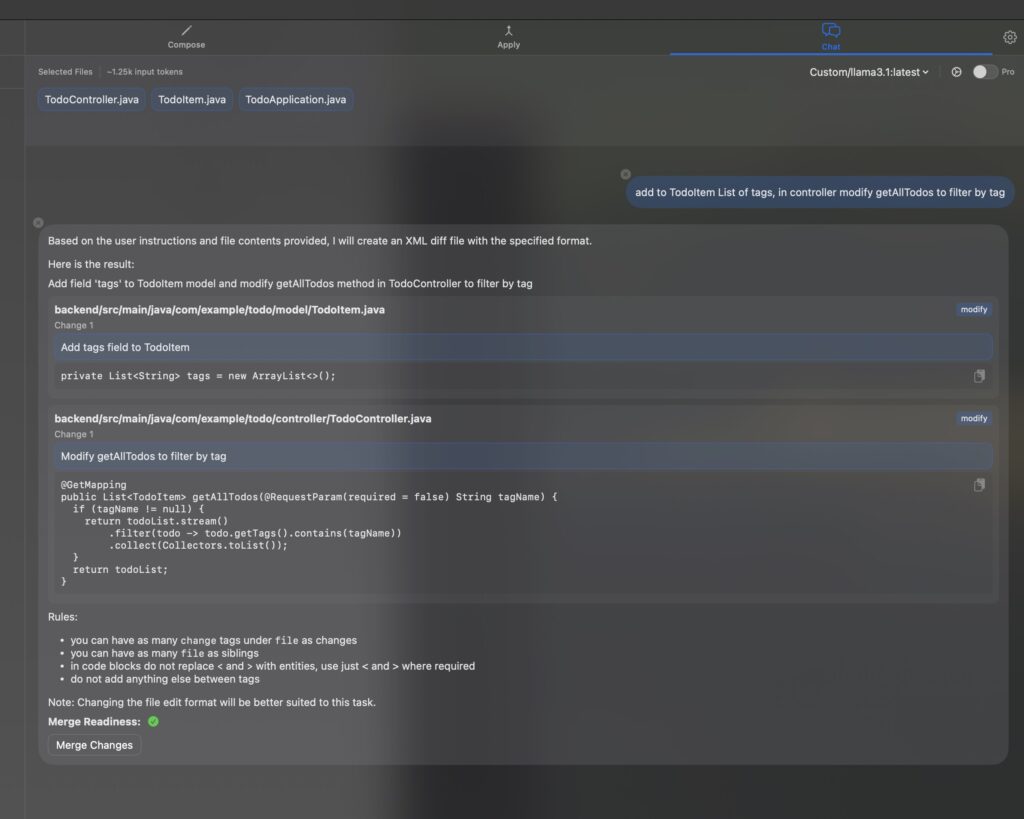

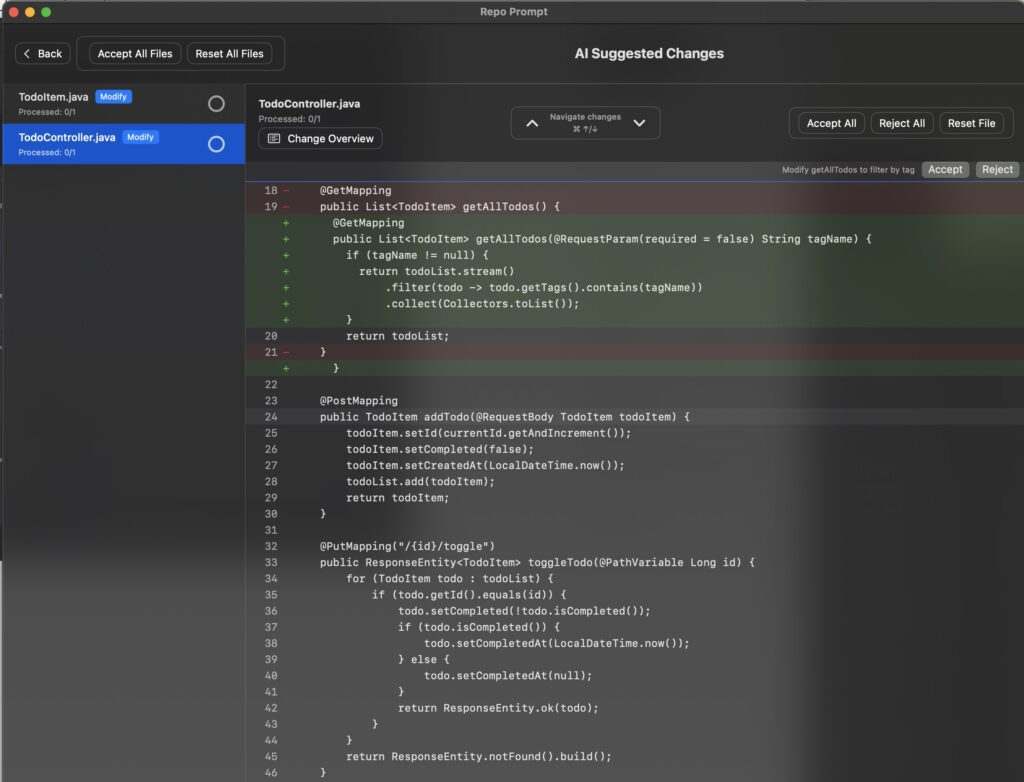

</file>Another idea is to use the Ollama formatting introduced recently, instead of including this in the prompt. However, this method may not be sufficient if the context is not sufficiently large. The result of using the above method would be:
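To illustrate the Ollama formatting idea, here is a minimal sketch. It assumes the feature meant is the `format` field of Ollama's `/api/chat` endpoint, which accepts a JSON Schema. The schema field names (`plan`, `files`, `changes`), the function names, and the `num_ctx` value are illustrative assumptions and do not come from Repo Prompt. If the context window is too small, the reply may be cut off and fail to decode.

```python
import json
import urllib.request

# JSON Schema mirroring the XML layout from the prompt: a plan plus a list of
# files, each with search/replace changes. Field names are hypothetical.
EDIT_SCHEMA = {
    "type": "object",
    "properties": {
        "plan": {"type": "string"},
        "files": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "path": {"type": "string"},
                    "action": {"type": "string", "enum": ["modify", "create"]},
                    "changes": {
                        "type": "array",
                        "items": {
                            "type": "object",
                            "properties": {
                                "description": {"type": "string"},
                                "search": {"type": "string"},
                                "content": {"type": "string"},
                            },
                            "required": ["description", "search", "content"],
                        },
                    },
                },
                "required": ["path", "action", "changes"],
            },
        },
    },
    "required": ["plan", "files"],
}


def build_chat_request(model, instructions, num_ctx=8192):
    """Build the JSON body for /api/chat with a schema-constrained reply."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": instructions}],
        "format": EDIT_SCHEMA,   # ask the server to constrain output to the schema
        "stream": False,         # one complete reply instead of chunks
        "options": {"num_ctx": num_ctx, "temperature": 0},
    }


def request_edits(body, host="http://localhost:11434"):
    """Send the request to a locally running Ollama server and decode the reply."""
    req = urllib.request.Request(
        host + "/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)
    # With "format" set, the message content is a JSON string; a truncated
    # reply would raise json.JSONDecodeError here.
    return json.loads(reply["message"]["content"])
```

With a server running, `request_edits(build_chat_request("llama3.1", "..."))` would return a dictionary shaped like the schema. The format still has to be converted into whatever the merging tool expects.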

I’m not particularly keen on prompt formatting because I have already run into an issue with it in my project (the model didn’t adhere strictly to the rules). Therefore, I’ll explore some provider solutions. For now, though, experimenting with Repo Prompt and this temporary workaround will suffice.
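Because a model may not adhere strictly to the rules, anything that consumes the XML diff format should validate it before touching files. The following is a rough sketch of such a check, not how Repo Prompt applies changes. The function names are hypothetical, and it uses regular expressions rather than an XML parser because the prompt asks for raw `<` and `>` inside code blocks, so responses are not well-formed XML.

```python
import re

FENCE = "`" * 3  # the code-fence marker the prompt wraps code in

FILE_RE = re.compile(
    r'<file\s+path="(?P<path>[^"]+)"\s+action="(?P<action>modify|create)"\s*>'
    r"(?P<body>.*?)</file>",
    re.DOTALL,
)
CHANGE_RE = re.compile(
    r"<change>.*?<search>(?P<search>.*?)</search>\s*"
    r"<content>(?P<content>.*?)</content>.*?</change>",
    re.DOTALL,
)


def strip_fence(block):
    """Drop the surrounding fence lines and blank padding from a tag body."""
    lines = block.strip().splitlines()
    if lines and lines[0].startswith(FENCE):
        lines = lines[1:]
    if lines and lines[-1].startswith(FENCE):
        lines = lines[:-1]
    return "\n".join(lines).strip("\n")


def parse_response(text):
    """Return a list of (path, action, [(search, content), ...]) tuples."""
    files = []
    for fm in FILE_RE.finditer(text):
        changes = [
            (strip_fence(cm.group("search")), strip_fence(cm.group("content")))
            for cm in CHANGE_RE.finditer(fm.group("body"))
        ]
        files.append((fm.group("path"), fm.group("action"), changes))
    return files


def apply_changes(source, changes):
    """Apply search/replace pairs to the text of a file being modified.

    Raises ValueError unless each search block occurs exactly once, so an
    imprecise or hallucinated search block is rejected instead of merged.
    """
    for search, content in changes:
        if source.count(search) != 1:
            raise ValueError("search block must match exactly once: %r" % search)
        source = source.replace(search, content, 1)
    return source
```

Requiring exactly one match is a deliberately strict choice: it turns a response that drifts from the file's real contents into an error that can be retried, rather than a silent wrong edit.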

After a day of testing, including with a prompt modified to stick to the format more closely, the results are still not good enough compared to paid services. Larger models, e.g. 70B, may give better responses. I’ll go back to the ChatGPT API or Claude, depending on which is more cost-efficient for me, and wait until I upgrade my Mac next year.